March 13, 2025

October 14, 2025

In today’s data ecosystem, the term table has taken on a wide range of meanings. As the number of tools built around tables continues to grow, so do the interpretations of what a table actually is. At their core, tables in Snowflake, Postgres, BigQuery, and Databricks all share a common essence: they store structured, tabular data. Beyond that, they diverge in countless ways.

Tabsdata Tables are no exception. In this post, we’ll dig into how Tabsdata redefines what a table can be, and the ways it differs from the conventional database concept.

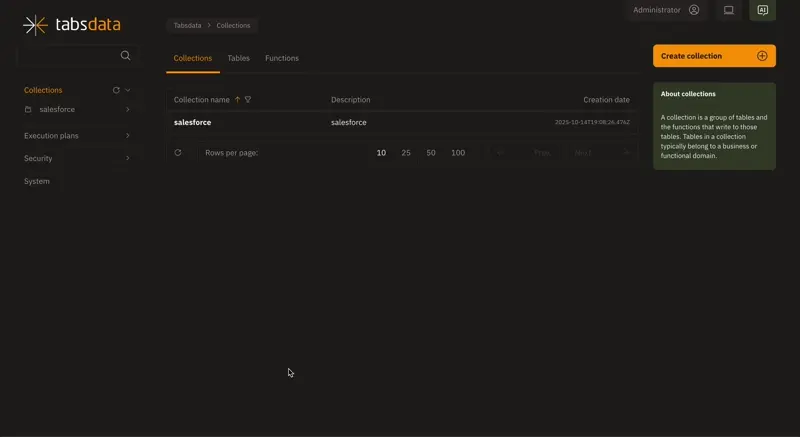

Tabsdata is a declarative data integration tool built around a pub/sub-for-tables methodology. You deploy the Tabsdata server into an environment of your choosing and build workflows from Tabsdata Functions and Tabsdata Tables: functions move data into and out of tables, and tables persistently store that data.

A core concept of Tabsdata is that Tables are the unit of operation. Data producers publish data into Tabsdata Tables, and external systems subscribe to the data in those tables. These tables are persistently stored within Tabsdata and provide a host of benefits, such as better preservation of semantics and metadata, improved data quality, automated lineage and provenance, and greater transparency.

In traditional SQL systems, loading data into a table usually means either appending new rows onto existing data or replacing the table’s contents entirely. This approach creates a tradeoff: you get fresh data, but at the cost of visibility into how that data looked at earlier points in time.

This is where Tabsdata Tables differ. Whenever a function runs, it commits data into one or more tables. When data is committed into a Tabsdata Table, Tabsdata creates an immutable version of that data and stores it as part of the Table’s version history. Each version exists independently, preserved alongside all previous and future versions of your table data.

Every time a function like a Publisher is invoked, it creates a new version at the head of your table’s lineage.
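To make the versioning model concrete, here is a minimal sketch in plain Python of a table whose every commit appends a new immutable version instead of appending rows or overwriting contents. This is not Tabsdata's API or storage format; `VersionedTable`, `TableVersion`, `commit`, and `head` are hypothetical names chosen only for illustration.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Any


@dataclass(frozen=True)
class TableVersion:
    """One immutable snapshot of a table's data (hypothetical model)."""
    number: int
    rows: tuple  # tuple of read-only row mappings


@dataclass
class VersionedTable:
    """Toy table: each commit adds a new version; old versions are never touched."""
    name: str
    versions: list = field(default_factory=list)

    def commit(self, rows: list[dict[str, Any]]) -> TableVersion:
        # Freeze each row so the stored data cannot be mutated after the commit.
        frozen = tuple(MappingProxyType(dict(r)) for r in rows)
        version = TableVersion(number=len(self.versions), rows=frozen)
        self.versions.append(version)  # the new version becomes the head
        return version

    @property
    def head(self) -> TableVersion:
        return self.versions[-1]


customers = VersionedTable("customers")
customers.commit([{"id": 1, "name": "Ada"}])
customers.commit([{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}])
print(customers.head.number)             # 1
print(len(customers.versions[0].rows))   # 1, the first version is still intact
```

The second commit does not modify version 0; both snapshots coexist, and the latest one is simply the head.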

This difference in methodology produces several powerful features unique to Tabsdata.

Tabsdata Tables don’t require predefined schemas. When you commit data, Tabsdata automatically infers and creates the schema for that version. This allows each version within a table to have its own schema, meaning your table can evolve in a way that reflects how your source data naturally changes over time.
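As a rough illustration of per-version schema inference, the sketch below derives a column-to-type mapping from the rows in each commit, so two versions of the same table can carry different schemas. It is a toy written for this post: `infer_schema` is a hypothetical helper that maps Python type names and is not how Tabsdata actually infers or represents types.

```python
from typing import Any


def infer_schema(rows: list[dict[str, Any]]) -> dict[str, str]:
    """Infer a column -> type-name mapping from committed rows (toy version)."""
    observed: dict[str, set[str]] = {}
    for row in rows:
        for column, value in row.items():
            # Record every Python type seen in the column; None counts as null.
            type_name = "null" if value is None else type(value).__name__
            observed.setdefault(column, set()).add(type_name)

    schema: dict[str, str] = {}
    for column, types in observed.items():
        non_null = types - {"null"}
        if len(non_null) == 1:
            schema[column] = non_null.pop()
        elif not non_null:
            schema[column] = "null"   # only nulls seen so far
        else:
            schema[column] = "mixed"  # conflicting non-null types
    return schema


# Two commits to the same table, each keeping its own inferred schema.
version_schemas = [
    infer_schema([{"id": 1, "amount": 9.5}]),
    infer_schema([{"id": 2, "amount": 12.0, "currency": "EUR"}]),
]
print(version_schemas[0])  # {'id': 'int', 'amount': 'float'}
print(version_schemas[1])  # {'id': 'int', 'amount': 'float', 'currency': 'str'}
```

When the source starts sending a `currency` column, the new version simply records it; the earlier version's schema is left as it was.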

You may not always have control over how and why your source data is changing, but that doesn’t mean you can’t be responsive when it does inevitably change.

Since Tabsdata infers schema directly from your data, you don’t have to recast or reshape incoming datasets. This keeps your stored data more faithful to its source and minimizes transformation-induced distortion.

Every table version is immutable and fully accessible from the UI or CLI. You can view both the schema and the data from any version, effectively allowing you to time travel to any point in your data’s history. Each version is stored alongside its execution and function context, so you not only see the data itself but also the exact code that produced it.
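The idea of storing each version together with the context that produced it can be sketched as a commit record that carries the data plus the function name and source. This is a hypothetical model, not Tabsdata's internal format: `VersionHistory`, `CommitRecord`, and `at` are illustrative names, and the function source is passed in as a plain string.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CommitRecord:
    """A stored version plus the context of the function run that produced it."""
    version: int
    rows: tuple            # each row stored as a tuple of (column, value) pairs
    function_name: str
    function_source: str
    committed_at: datetime


class VersionHistory:
    """Toy version history supporting lookup of any earlier version."""

    def __init__(self) -> None:
        self._records: list[CommitRecord] = []

    def commit(self, rows, function_name: str, function_source: str) -> CommitRecord:
        record = CommitRecord(
            version=len(self._records),
            rows=tuple(tuple(r.items()) for r in rows),
            function_name=function_name,
            function_source=function_source,
            committed_at=datetime.now(timezone.utc),
        )
        self._records.append(record)
        return record

    def at(self, version: int) -> CommitRecord:
        """'Time travel': return the data and execution context of any version."""
        return self._records[version]


history = VersionHistory()
history.commit([{"id": 1}], "load_orders", "def load_orders(): ...")
history.commit([{"id": 1}, {"id": 2}], "load_orders", "def load_orders_v2(): ...")

old = history.at(0)
print([dict(r) for r in old.rows])  # [{'id': 1}]
print(old.function_source)          # def load_orders(): ...
```

Looking up version 0 returns both the data as it was and the code that wrote it, which is the pairing the UI and CLI expose in Tabsdata.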

This allows you to answer important business questions about what your data looked like at any point in time and which code produced it.

Ever wonder where a specific value came from? Tabsdata tracks the upstream lineage of every cell, letting you trace a value all the way back through the operations that generated it.
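Cell-level lineage can be pictured as each value carrying a link to the values and operation it was derived from. The minimal sketch below models that as a small tree and walks it upstream. It is purely illustrative: `Cell`, `derive`, `trace`, and the `orders@v3[row 0].price` notation are hypothetical and do not reflect how Tabsdata records lineage internally.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """A value plus where it came from: a source location or an operation."""
    value: object
    origin: str
    parents: tuple[Cell, ...] = ()


def derive(op_name: str, fn, *inputs: Cell) -> Cell:
    """Apply fn to the input values and remember the inputs as upstream lineage."""
    return Cell(fn(*(c.value for c in inputs)), op_name, inputs)


def trace(cell: Cell, depth: int = 0) -> list[str]:
    """Walk lineage upstream, returning one indented line per step."""
    lines = ["  " * depth + f"{cell.origin} -> {cell.value!r}"]
    for parent in cell.parents:
        lines.extend(trace(parent, depth + 1))
    return lines


price = Cell(20.0, "orders@v3[row 0].price")
qty = Cell(3, "orders@v3[row 0].qty")
total = derive("multiply(price, qty)", lambda p, q: p * q, price, qty)
print("\n".join(trace(total)))
# multiply(price, qty) -> 60.0
#   orders@v3[row 0].price -> 20.0
#   orders@v3[row 0].qty -> 3
```

Because each derived cell keeps references to its inputs, tracing a value back to the exact table version and column it originated from is a simple tree walk.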

State management is automatic. You don’t have to explicitly cache intermediate datasets or worry about losing access to your data once an execution finishes. Every table commit remains accessible via the UI or CLI.

In a traditional ETL DAG architecture, each step’s dependencies must be defined manually, requiring the data engineer to imperatively specify how and when each part of the workflow should run. This extra layer of complexity makes workflows fragile and prone to breakage.

In Tabsdata, functions follow a simple rule: they trigger when a table they depend on receives new data. You don't have to define explicit task dependencies or data flow logic, because your tables are the data flow.

This also means you don’t need to create jobs or manage runs manually. Just register your functions, and they automatically run when the tables they depend on get new data.
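The trigger rule can be sketched as a tiny registry: functions subscribe to input tables, and a commit to a table runs every function that depends on it, whose output is in turn committed downstream. This is a hypothetical model, not Tabsdata's API; `TriggerRegistry`, `register`, `depends_on`, and `writes_to` are illustrative names. The sketch runs triggers synchronously and depth-first and does not guard against cyclic dependencies.

```python
from collections import defaultdict
from typing import Any, Callable


class TriggerRegistry:
    """Toy scheduler: a function runs whenever a table it depends on gets a commit."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[tuple[Callable, str]]] = defaultdict(list)
        self.tables: dict[str, list[Any]] = defaultdict(list)  # table -> versions

    def register(self, fn: Callable, depends_on: str, writes_to: str) -> None:
        # Registration is the only wiring step; no DAG is declared by hand.
        self._subscribers[depends_on].append((fn, writes_to))

    def commit(self, table: str, data: Any) -> None:
        self.tables[table].append(data)  # each commit is a new version
        # New data on `table` triggers every function that depends on it.
        for fn, output_table in self._subscribers[table]:
            self.commit(output_table, fn(data))


registry = TriggerRegistry()
registry.register(lambda rows: [r for r in rows if r["amount"] > 0],
                  depends_on="raw_orders", writes_to="clean_orders")
registry.register(lambda rows: sum(r["amount"] for r in rows),
                  depends_on="clean_orders", writes_to="order_total")

registry.commit("raw_orders", [{"amount": 10}, {"amount": -2}, {"amount": 5}])
print(registry.tables["order_total"])  # [15]
```

Publishing to `raw_orders` is the only action taken; the downstream functions run because their input tables changed, and the execution order falls out of the table dependencies.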

Data integration is inherently complex. Every time data passes from one system to another, some level of data loss or degradation is bound to occur. Until now, we have accepted that as a fact of life because there has been no better alternative.

Tabsdata's Table architecture is an innovative solution to this problem. Because so much of the data we work with is tabular, making tables the unit of operation lets data propagate with a richer representation of its source’s context, capturing metadata, semantics, lineage, and more.

This richer context not only gives users better visibility and auditing capabilities but also lets Tabsdata automate and abstract away tasks, such as orchestration, that data engineers have until now handled imperatively.